Hello, fellow blog readers. This is to inform you that I have officially left Hinge Digital and started working as a 3D Lead at Nike, designing shoes, apparel, and accessories. This campus is incredible! I will be doing everything: modeling, sculpting, rigging, animating, texturing, lighting, rendering, and concepting.

IrayForMaya Foodscape Breakdown Tutorial

Hey guys, I am finally posting the breakdown video I did while at Hinge using the IrayForMaya plugin on the Foodscape project.

This was rendered using Iray in Maya.

Nvidia Iray Foodscape

Hey everyone! We have finished our Foodscape project using NVIDIA’s Iray and 0x1’s plugin for Maya. You can check out my in-depth breakdown over at Hinge Digital’s website here:

http://hingedigital.com/hingeblog/hinge-teams-with-nvidia-and-0x1-to-create-foodscape/

Here is the finished video of it:

NVIDIA Iray “Foodscape” from Hinge Digital on Vimeo.

A screen-capture making-of and tutorial will also be coming out in the future, so keep your eyes peeled!

A Universe of Possibilities

Hinge Digital’s latest spot. Amazing work, Andrew Nawrot!

Jamba Juice “A Universe of Possibilities” from Hinge Digital on Vimeo.

3D Artist Magazine Issue #61

Valspar Chameleon

This is a little test I worked on for the Valspar Chameleon. What a cute little guy! Everything is CG and was rendered in Maya using Arnold. This was the first scene I rendered in Arnold. No teapots for this guy! The render is 2880×1620, so make sure you click on it to see all of the detail.

NW Natural Breakdown

Here is the promised breakdown for the NW Natural eggs, as mentioned in a previous post.

The workflow for me for this project:

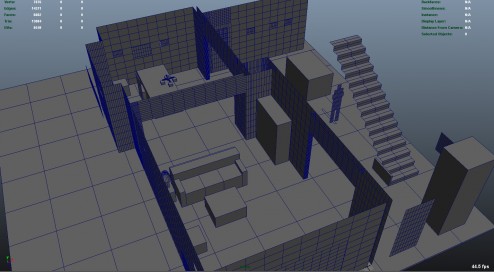

1.) I went on set and took tons and tons of distance measurements. I measured everything I could, down to 1/4″ (1/8″ for smaller objects). The purpose is to recreate the set in 3D, so we can make sure the characters are the correct size, sit at the correct distance from camera, and occupy the correct space to interact with the real objects around them. We also use the set geometry to project the HDRI lighting onto the characters, which gives correct spatial luminance, as opposed to lighting with an infinite IBL. The quick blocked-out version looks something like this:

This screen grab is from the middle stage. The key is to keep things clean and simple and add detail later. As you can see, a lot of the walls have way more geometry than they need; I cleaned this up once I finished the first pass. I started with one 10X10 plane and duplicated it over and over to create all the walls. That way I could visually compare the size ratios between walls using the 10X10 grid and make sure everything was lining up correctly.
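
Since the set is rebuilt from tape-measure readings, it helps to convert them into scene units the same way every time. Here is a minimal sketch, assuming readings are written as whole inches plus a fraction (like "12 1/4") and that the scene uses Maya's default linear unit of centimeters; the function names are hypothetical helpers, not part of any Maya API.

```python
from fractions import Fraction

CM_PER_INCH = 2.54  # exact by definition


def parse_inches(reading):
    """Parse a tape reading such as '12', '3/8' or '12 1/4' into exact inches."""
    total = Fraction(0)
    for part in reading.split():
        total += Fraction(part)  # Fraction accepts both '12' and '1/4'
    return total


def to_scene_units(reading, cm_per_unit=1.0):
    """Convert a reading in inches to scene units (cm_per_unit=1.0 means centimeters)."""
    return float(parse_inches(reading)) * CM_PER_INCH / cm_per_unit
```

For example, `to_scene_units("12 1/4")` gives roughly 31.1 cm. Keeping the fractions exact until the final conversion avoids rounding drift when many small measurements are added up along a wall.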

2.) The second stage for me was HDRI creation. We bracketed 6 exposures per position and shot 4 positions around plus 1 at the zenith. We really should have shot 1 at the nadir, but time was tight. This is generally enough information for indoor lighting situations, but not nearly enough for outdoors with the sun (unless you plan on deleting the sun and adding it back in later). More exposures are generally better because they cover a wider dynamic range. We shot our HDRIs with a fisheye lens on a Canon T2i, mounted on a tripod with a nodal offset to limit parallax. With the camera shooting the footage, we also shot a reference frame of a 50% grey sphere and a chrome ball, which helps us match the lighting later on. After shooting the HDRIs, I take them into Camera Raw, zero out all of the tweaks the camera makes, and linearize them as best I can. During this stage I also remove camera artifacts such as chromatic aberration; I’ve found it’s better to do this before the stitch rather than after. I then convert them to TIFFs and combine them into an HDR using Picturenaut. I use Picturenaut because it *appears* to give me the best, artifact-free result. I’m no expert here; I came to this conclusion only after my own testing and comparing. After doing this, we get something like these:
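
I can’t vouch for what Picturenaut does internally, but the general idea behind merging linearized brackets is simple: each exposure gives its own estimate of scene radiance (pixel value divided by exposure time), and those estimates are averaged with a weight that distrusts near-black and clipped values. Here is a minimal per-pixel sketch, assuming the inputs are already linear and normalized to 0..1, and using a simple triangle weight (a common choice, not necessarily Picturenaut’s).

```python
def hat_weight(z):
    """Triangle weight: trust mid-tones, distrust values near 0 (noise) or 1 (clipping)."""
    return 1.0 - abs(2.0 * z - 1.0)


def merge_pixel(values, exposure_times):
    """Estimate the relative radiance of one pixel from linear bracketed values (0..1)."""
    num = 0.0
    den = 0.0
    for z, t in zip(values, exposure_times):
        w = hat_weight(z)
        num += w * (z / t)  # each exposure's radiance estimate, weighted
        den += w
    if den == 0.0:
        # Every sample is pure black or fully clipped, so none is trustworthy;
        # fall back to an unweighted mean of the estimates.
        return sum(z / t for z, t in zip(values, exposure_times)) / len(values)
    return num / den
```

With a true radiance of 2.0 and exposure times of 1/16, 1/4 and 1 second, the linear values are 0.125, 0.5 and 1.0 (clipped); the clipped sample gets zero weight and the merge recovers 2.0.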

3.) I then stitched these together using PTGui, which seems to be the best option out there. I tried Hugin and could not get it to do anything but crash. I also tried Autopano Giga, and it couldn’t resolve issues the way PTGui can. Autopano does seem to be better at finding control points, but it had so many issues actually combining the images that I couldn’t use it. The result is this:

Beautiful, right? Just kidding. After we bring the exposure down, we get this:

Much better.
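
Bringing the exposure down on a linear HDR is just a multiplication by a power of two per stop, which is one reason the linearization in step 2 matters. A minimal sketch, assuming linear RGB values; how many stops this image was pulled down isn’t stated, so the -3 below is purely illustrative.

```python
def adjust_exposure(rgb, stops):
    """Scale linear RGB by 2**stops; negative stops darken, positive stops brighten."""
    scale = 2.0 ** stops
    return tuple(channel * scale for channel in rgb)


# Illustrative only: three stops down divides every channel by 8.
print(adjust_exposure((8.0, 4.0, 2.0), -3))  # (1.0, 0.5, 0.25)
```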

4.) This is the HDR image I used for the chandelier shot. As you can see, there are obvious seam issues: some things aren’t aligned correctly, and the distortion is a little funky. But when I lined it up to my geometry, it was close enough. Also, there aren’t any major reflective surfaces in the render that would reveal the seams, and the seams won’t affect the lighting, so spending extra time fixing them was not necessary.

5.) The next step is to create a spherical projection texture and move it to where your camera was located. Attach your HDRI to it (or a small JPG version of it for previewing purposes) and align the HDRI with your geometry. If you did everything correctly, things should line up nicely. If not, go back to either the measurement or the stitching step and play around a bit more. Once I was satisfied with the position, I baked the texture down to the geometry and produced these texture maps:
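
Conceptually, the spherical projection turns each point on the set geometry into a direction from the camera position, and that direction into a lat-long texture coordinate. This is what separates it from an infinite IBL, which looks up the environment by ray direction alone and ignores where the shading point sits. Here is a minimal sketch of the mapping, assuming a Y-up scene and one particular lat-long orientation (Maya’s projection node may orient the map differently); `latlong_uv` is a hypothetical helper.

```python
import math


def latlong_uv(point, center):
    """Equirectangular (lat-long) UV for a surface point, projected from `center`.

    Assumed convention (hypothetical): Y is up, u wraps around the horizon,
    v = 0.5 at the horizon and v = 1 at the zenith.
    """
    dx, dy, dz = (p - c for p, c in zip(point, center))
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("point coincides with the projection center")
    dx, dy, dz = dx / length, dy / length, dz / length
    u = (math.atan2(dz, dx) / (2.0 * math.pi)) % 1.0
    v = 0.5 + math.asin(max(-1.0, min(1.0, dy))) / math.pi
    return u, v
```

Baking then amounts to evaluating this for every texel on the set geometry and storing the HDRI color found at (u, v), which is why the measurements from step 1 have to be accurate for the projection to line up.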

6.) Now the lighting stage! I brought in a grey sphere, moved it into the same position as the real sphere, and began tweaking the lighting until my grey sphere looked the same as the real one, or as close as I could get with the time given. I tweaked the textures with a combination of Photoshop work and color correct nodes in Maya. Some shots needed only a little tweaking, but for others, for whatever reason, I had to do much more extensive work on exposure and hues. For the refraction in the glasses, I needed to do something special to make sure the refraction matched the color and intensity of what was behind it. To do this, I put a rayswitch in front of the HDRI textures on the geometry: the actual plate was projected into the refraction eye rays only, and the HDRI went into everything else. Here is a comparison between the real grey ball and the CG version:

Real:

CG:

Real:

CG:
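
The rayswitch trick in step 6 boils down to choosing a texture based on what kind of ray is asking. The sketch below shows only that decision logic; it is not mental ray’s shader API, and the ray-type names are simplified assumptions.

```python
from enum import Enum, auto


class RayType(Enum):
    # Simplified, hypothetical set of ray types.
    EYE = auto()
    REFLECTION = auto()
    REFRACTION = auto()
    FINAL_GATHER = auto()
    SHADOW = auto()


def environment_for_ray(ray_type, plate_color, hdri_color):
    """Refraction rays see the projected plate; every other ray type sees the HDRI."""
    if ray_type is RayType.REFRACTION:
        return plate_color
    return hdri_color
```

The design choice is that the glass refracts the exact plate pixels behind it, so color and intensity match the footage, while final gather and reflections still get the full dynamic range of the HDRI for lighting.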

Early Test of Characters in Scene:

The chandelier posed an interesting problem: we didn’t shoot HDRIs of the chandelier object itself, so we couldn’t accurately compute its luminance at every point with a texture. Instead, I applied materials to it that mimic the real materials and tried to make it look as close as possible to the real thing, using the HDR of the scene to light it. Since the lighting was already right, I should be able to get the chandelier looking close to the real thing and thus giving off correct light bounce. Doing it this way also automatically gave me the correct occlusion and shadowing on the eggs. It’s a win-win!

Closing thoughts:

Since I was not using ANY lights to light the characters, and they were all lit by final gather and self-illuminated geometry, I ran into a lot of final gather flickering. To solve these issues I used a combination of practices:

-In some scenes, all I had to do was tweak the final gather settings.

-In a couple of scenes, I was able to use brute-force FG and be fine.

-Most of the time, however, I needed to use importons + FG, which solved most of the issues.

-The last two shots were the worst. No matter what I did, I still had flicker. Render times started at 20 minutes and shot through the roof to 20 HOURS a frame while I tried to fix the FG issue. There was no strong, definite light source, and the characters ranged near and far, fast and slow. So I needed to rely on more advanced techniques, such as the fg_shooter and placing portal lights in front of more important geometry to help MR know where to look. I also increased the FG quality on a per-material basis. This brought render times down to 2 hours a frame. Much, much better.

I didn’t touch on the egg materials because they’re all pretty standard: I used the fast skin SSS shader coupled with the MIA material for diffuse and reflections, with a low IOR.

Once the hdr had been created and projected, it took, on average, about 6 hours per shot to finalize the look and lighting of the shot. All in all, I spent about two weeks working on this project from measuring to last render.

3D Total Excellence Award

Untitled Paper Dancer project

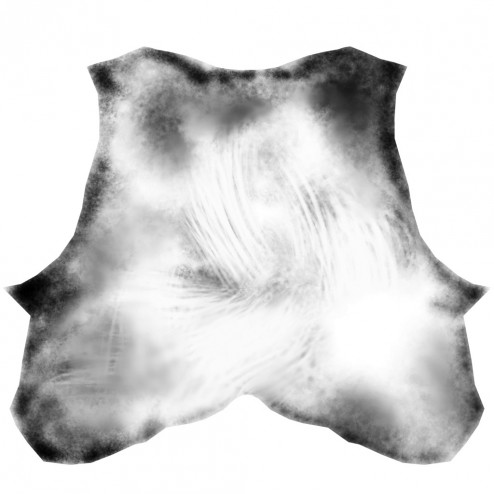

Below is an impromptu render for an in-house project. This is our first project being rendered completely in Arnold. I figured it would be nice to get an up-close render of the ole gal and see how her materials were working. Hope you all like it! Click to see her in all of her full 1080p glory! 😉 I did all of the look dev, lighting, and materials.

NW Natural Little Leaks Eggs

Here is the latest spot that I did look dev and lighting on.

We had a little over a month from start to finish. I was responsible for gathering all of the lighting info, HDRs, HDR stitching, projecting, previs, environment modeling, shading, lighting, and rendering.

The company I work for is amazing, and we have some incredible talent here. I’m very excited to be a part of such a dedicated and talented group.

Here’s a link to Hinge’s website for some concepty stuff:

http://hingedigital.com/projects/nw-natural-little-leaks/

Carbon Knight Write Up

Finally! (Hopefully) an enlightening behind-the-scenes look at the Carbon Knight spot.

For this project I used Maya and Mental Ray to render. I mulled over doing this in V-Ray or Arnold, but due to time constraints, I ended up going with Mental Ray because it has the fantastic flexibility and performance tuning options this project needed. Let us first dig into the materials. We used the MIAX_passes material for everything. This is Mental Ray’s workhorse material and provides tons of performance tuning options. Adrian Grey was the artist who did most of the work on the knight. He is an incredibly talented artist.

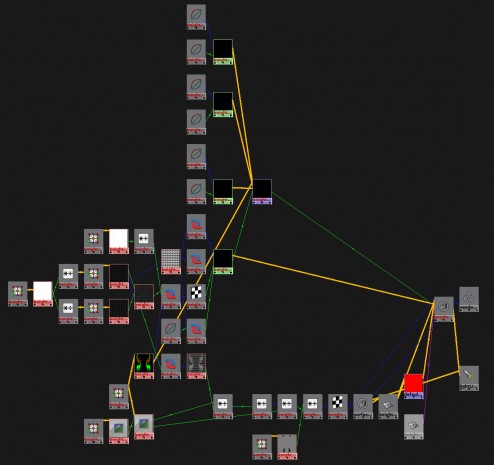

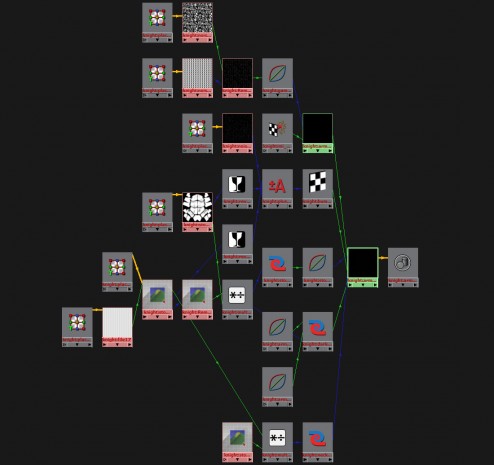

Below is the material network used on our main body material.

As you can see, the material is fairly straightforward. We used a combination of procedural and hand-painted textures to get the desired effect. We did all of our texture painting in Mudbox, Mari, and Photoshop. Most of our textures were 4k, but some went up to 8k. Below are some examples of what the textures look like:

There were tons of these textures that we would layer on top of each other with multiply divide nodes, or we would use an RGB texture to mask out different aspects of the textures. We put tons and tons of detail into this character. We never fully see a lot of the textures, but if they weren’t there, you would notice something was off. It’s all in the details.
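
The layering described above amounts to per-pixel multiplication, with one RGB texture acting as three separate masks (one per channel). Here is a minimal sketch, assuming colors are (r, g, b) tuples of floats in 0..1; the function names are illustrative, not Maya node names.

```python
def multiply_layers(base, *layers):
    """Multiply several RGB layers together, like chaining multiply/divide nodes."""
    out = tuple(base)
    for layer in layers:
        out = tuple(a * b for a, b in zip(out, layer))
    return out


def masked_blend(mask_rgb, channel, a, b):
    """Use one channel ('r', 'g' or 'b') of an RGB mask to blend from color a to color b."""
    m = mask_rgb["rgb".index(channel)]
    return tuple(x * (1.0 - m) + y * m for x, y in zip(a, b))
```

Packing three masks into one RGB texture means one file and one texture lookup can drive three different material breakups, which adds up when you are layering as many maps as this character had.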

Our material parameters were also very straightforward. We set up our look dev scene for the knight using an outdoor HDR from HDRLabs. We included a fully white, a 50% grey, and a fully chrome sphere in the scene. That way, we ensured our light levels would be balanced correctly when we were making material adjustments. This made it easy to put the knight in various lighting scenes: as long as the lighting scene was set up correctly, the knight would fit like a glove.

We mainly used BRDF curves between 1.4 and 1.8 for the materials. Sometimes we defined our own BRDF curves to get a certain look. We wanted to keep our materials as physically accurate as possible; however, in the case of the armor material, we did something a little different.

Above is the material network for the armor. We wanted the armor to have glints that would catch the eye and really give the material some life. We added mi_bump_flakes, but instead of plugging it into the bump slot, we added it to the additional color slot of the MIAX material. This slightly breaks the physical accuracy of the material, but the material and light response we got from doing so was fantastic. You need to understand the rules of material properties so you know when you can break them!
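
The write-up doesn’t spell out what the 1.4 to 1.8 values feed into; assuming they are indices of refraction used for Fresnel reflectance, the normal-incidence reflectivity follows from a standard formula, and a custom curve can be sketched as a Schlick-style blend between a 0-degree and a 90-degree reflectance. The custom curve below is modeled loosely on mia_material’s 0-degree / 90-degree / curve controls; treating it as mental ray’s exact formula is an assumption, and both function names are hypothetical.

```python
def fresnel_f0(ior):
    """Normal-incidence reflectance of a dielectric in air: ((n - 1) / (n + 1))**2."""
    return ((ior - 1.0) / (ior + 1.0)) ** 2


def custom_brdf(cos_theta, refl_0, refl_90=1.0, curve=5.0):
    """Schlick-style curve: refl_0 facing the viewer, rising toward refl_90 at grazing angles."""
    return refl_0 + (refl_90 - refl_0) * (1.0 - cos_theta) ** curve
```

Under that assumption, an IOR of 1.4 reflects about 2.8% of light head-on and 1.8 about 8.2%, so the whole 1.4 to 1.8 range stays in the low-reflectance, dielectric territory that keeps materials physically plausible.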

Every time we were developing the materials for this character, we always tried to think of what the character had been through previously and what sort of life the materials have had. Were they worn? Were they brand new? What sort of battles had these materials seen? This really set the stage for the look of our character and our lighting scenarios. Finding good reference was crucial at this stage. We needed to see how certain materials reacted in different light set ups so we could make sure our material properties were accurate.

The render time killers were the displacement and the glossy reflections. The displacement needed insane settings because the knight’s displaced undergarment was one giant piece. We maxed out Maya’s subdiv level and had to add another level on top of it. That’s nuts! However, after fine-tuning the global and per-object displacement settings, calculation times went down from 20 minutes to 5 minutes. The key to this project was optimization. Optimize, optimize, optimize.
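
To see why the displacement got so heavy, note that each level of Catmull-Clark subdivision on an all-quad mesh multiplies the face count by four. The write-up mentions subdividing to 48 million polygons; the base mesh count isn’t given, so the 50,000-quad figure below is hypothetical, and a mesh with triangles would count slightly differently.

```python
def quads_after_subdivision(base_quads, levels):
    """Face count after `levels` rounds of Catmull-Clark on an all-quad mesh (x4 per level)."""
    return base_quads * 4 ** levels


def levels_needed(base_quads, target_quads):
    """Smallest number of subdivision levels whose face count reaches target_quads."""
    if base_quads <= 0:
        raise ValueError("base_quads must be positive")
    levels = 0
    count = base_quads
    while count < target_quads:
        count *= 4
        levels += 1
    return levels
```

With that hypothetical base, `levels_needed(50_000, 48_000_000)` returns 5, which lands at 51.2 million quads; every extra level quadruples memory and tessellation time, which is why tuning the displacement settings paid off so much.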

Lighting and Rendering

Here are the reasons why I chose Mental Ray over the other render engines for this project. I lit these scenes in two different ways: either with custom HDRs, or with geometry that had an mia_light_surface material attached. With the geometry approach, I could control how the light appears in reflections and its indirect lighting contribution when I needed to.

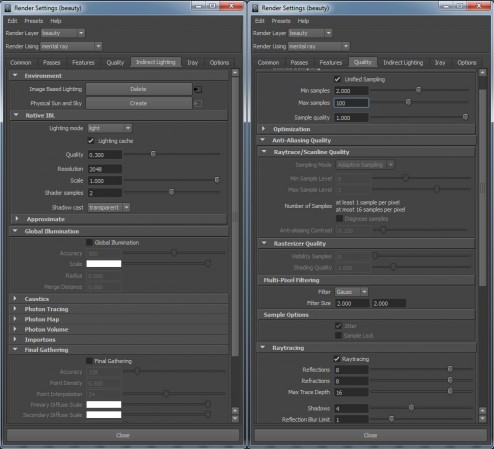

I chose to light mainly with HDRs because Mental Ray has a new importance lighting scheme built into its new native IBL in Maya 2013. This allows for beautiful shadows and accurate lighting. The shadows no longer rely only on final gather, but on direct illumination from the HDR. In most of the shots I did not even use final gather, or I tuned it to interact only with certain materials. This was more of an artistic choice than anything else, to enhance the contrast in the shots; but being able to get direct lighting and shadowing from an HDR without long render times meant we didn’t have to rely on final gather as we had in past projects. The inclusion of unified sampling in 2013 also made a monumental difference, lowering our render times and bringing out detail compared to the old adaptive aliasing settings. In most cases we were looking at render times hovering between 30 minutes and 1 hour, which is incredible when you realize we had to subdivide the base mesh to 48 million polygons to get the displacement to be solid. Here are the render settings I used on most shots:

I also chose to light with an HDR rather than traditional area lights because Mental Ray has an amazing little node called “mia_envblur.” I could plug the envblur into the material networks and have them intelligently blur the environment based on their glossy attributes during lookup, while only having to sample the environment once. I still had to sample the self-reflections multiple times, but since the HDR caused most of the reflections, I was able to more than halve our render times. Halve!! Going from 3-hour render times to about 1 hour is a massive saving.
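
The idea behind mia_envblur is to pay for the environment blur once and reuse it for every glossy lookup. The sketch below illustrates that idea on a 1D, wrap-around “environment” so it stays short: it prefilters progressively blurrier copies up front, then each lookup picks a blur level from the material’s glossiness. The box blur, the doubling radii, and the linear glossiness-to-level mapping are all assumptions for illustration, not mia_envblur’s actual implementation.

```python
def box_blur_ring(samples, radius):
    """Box-blur a 1D environment that wraps around (a stand-in for one lat-long row)."""
    n = len(samples)
    width = 2 * radius + 1
    return [sum(samples[(i + k) % n] for k in range(-radius, radius + 1)) / width
            for i in range(n)]


def prefilter(samples, levels):
    """Precompute progressively blurrier copies once; level 0 is the sharp original."""
    return [box_blur_ring(samples, 2 ** lvl - 1) for lvl in range(levels)]


def glossy_lookup(pyramid, index, glossiness):
    """One cheap lookup per shading sample; glossiness 1 = mirror, 0 = blurriest level."""
    roughness = 1.0 - max(0.0, min(1.0, glossiness))
    level = roughness * (len(pyramid) - 1)
    lo = int(level)
    hi = min(lo + 1, len(pyramid) - 1)
    t = level - lo  # blend between the two nearest blur levels
    return pyramid[lo][index] * (1.0 - t) + pyramid[hi][index] * t
```

The expensive part, blurring, happens once in `prefilter`; each glossy lookup afterwards is a constant-time read instead of many environment samples, which is where savings like the ones described above come from.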

To help with light positions and material qualities, I used Spraytrace. This was an invaluable tool for speeding up my lighting and base material setup. It definitely struggled when I added displacement and some of the fancier networks, but it handled the rest like a champ. Using Spraytrace, I was able to flesh out all of our general light setups for our ten shots within one week, by myself. I used different lighting for each spot; nothing was reused in terms of light setups.

In the shots I chose to light with geometry, I did so because I wanted a little more control and wanted the character to be able to move in and out of the light. The cards needed much more sampling than the HDR did, and thus took much longer to render. I decided against using area lights because their light and sampling distribution were lacking, and we needed something more accurate. In these shots I did need to turn on final gather, or I would not get any lighting or shadows. I was able to get away with fairly low final gather settings because everything was so dark, but I did have trouble with flicker on the arrow and fletching. To fix this, instead of cranking up the overall final gather calculation, I increased it on a per-material basis for just those objects.

I think that covers a good chunk of what I did on the project. Hope this helps!

Carbon Knight

Here is the latest spot from Hinge. Go check out the Carbon Knight!

Breakdowns coming soon!